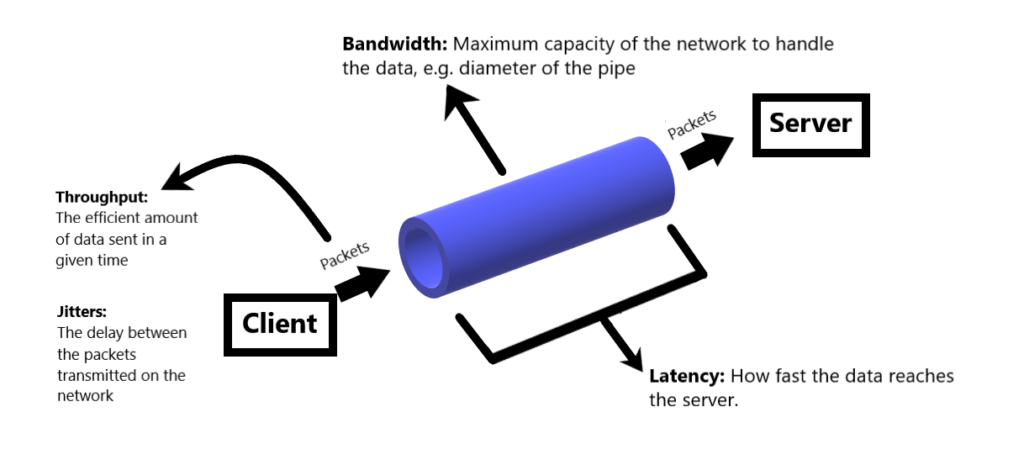

Alright, as a testing engineer it is always important to understand how a webpage loads. This applies not only to web applications: any client-server application connected over an intranet or the internet has to exchange data between systems, and the speed of that exchange matters. When speed is high, data transfer time is short. When speed is low, the transfer takes longer, which means a laggy application, right? So how do we measure the speed then? Yup, there is a way: throughput and bandwidth are the two parameters used to measure the speed of a network.

Throughput Vs Bandwidth

Throughput tells you how much data is actually transferred from a source in a given time, and bandwidth tells you how much data can theoretically be transferred from a source in that time. So knowing how both throughput and bandwidth are performing is crucial for understanding any network’s performance.

Again, network throughput refers to how much data is actually transferred from source to destination within a timeframe. Throughput measures how many packets arrive at their destinations successfully (i.e. efficiency). It is mostly measured in bits per second (bps), which can also be read as data per second.
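To make the definition concrete, here is a minimal sketch of the arithmetic: bits that actually arrived, divided by the time it took. The function name `throughput_bps` and the sample numbers are made up for illustration and are not from any particular tool.

```python
def throughput_bps(bytes_delivered: int, seconds: float) -> float:
    """Return throughput in bits per second for data that actually arrived."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    # 1 byte = 8 bits, and throughput is usually quoted in bits per second.
    return bytes_delivered * 8 / seconds


# Hypothetical measurement: 25,000,000 bytes delivered in 4 seconds.
rate = throughput_bps(25_000_000, 4.0)
print(f"{rate / 1_000_000:.1f} Mbps")  # prints "50.0 Mbps"
```

Only successfully delivered bytes should be counted here. Data that was sent but lost on the way does not add to throughput.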

Successful packet transmission is key to high performance inside a network. When people use programs or software, they want their requests to be received and responded to in a timely fashion. Packets lost in transmission have to be sent again, so packet loss leads to slow network performance and low throughput.

Latency Vs Jitter

Packet loss, latency, and jitter are all related to low throughput. Latency is the amount of time it takes for a packet to make it from source to destination, and the number of hops between source and destination is one of the factors that affects it. Jitter technically refers to the variation in packet delay (i.e. the difference between the delay of one packet and the delay of the next). Minimizing all these factors is critical to increasing throughput and data performance.
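Here is a small sketch of how latency and jitter could be computed from a list of per-packet delays. It assumes one simple convention for jitter, the average absolute difference between the delays of consecutive packets. Real tools may use other formulas (for example a smoothed estimate), and the function names and sample delays are hypothetical.

```python
from statistics import mean


def average_latency_ms(delays_ms: list[float]) -> float:
    """Average one-way delay across all packets, in milliseconds."""
    return mean(delays_ms)


def average_jitter_ms(delays_ms: list[float]) -> float:
    """Mean absolute difference between the delays of consecutive packets."""
    diffs = [abs(later - earlier) for earlier, later in zip(delays_ms, delays_ms[1:])]
    # With fewer than two packets there is nothing to compare.
    return mean(diffs) if diffs else 0.0


# Hypothetical delays (ms) for five packets on the same route.
delays = [20.0, 22.0, 19.0, 30.0, 21.0]
print(f"latency: {average_latency_ms(delays):.2f} ms")  # latency: 22.40 ms
print(f"jitter:  {average_jitter_ms(delays):.2f} ms")   # jitter:  6.25 ms
```

Notice that the fourth packet (30 ms) barely moves the average latency but accounts for most of the jitter.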

What is Speed in networking Vs Bandwidth?

Basically, network bandwidth is defined as the maximum transfer capacity of a network. It’s a measure of how much data can be sent and received at a time. Bandwidth can be measured in bits, megabits, or gigabits per second.

It’s important to know that bandwidth doesn’t actually increase the speed at which data travels through a network. You can increase a network’s bandwidth all you want, but what you’ll end up doing is increasing the amount of data that can be sent at one time, not the speed at which each packet travels.
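A rough way to see this is a simplified model where transfer time is the latency plus the payload size divided by the bandwidth. This is only a sketch: it ignores protocol overhead, retransmissions, and congestion, and the name `transfer_time_s` and the numbers are assumptions for illustration.

```python
def transfer_time_s(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """Simplified model: fixed latency plus time to push the bits onto the link."""
    return latency_s + size_bytes * 8 / bandwidth_bps


# Hypothetical case: a 10 KB response over a link with 50 ms latency.
payload = 10_000
for bandwidth in (100e6, 1e9):  # 100 Mbps, then 1 Gbps
    t = transfer_time_s(payload, bandwidth, latency_s=0.05)
    print(f"{bandwidth / 1e6:.0f} Mbps -> {t * 1000:.2f} ms")
# 100 Mbps -> 50.80 ms
# 1000 Mbps -> 50.08 ms
```

Ten times the bandwidth saves less than a millisecond here, because almost all of the time is latency, and bandwidth does nothing for that part.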

That being said, bandwidth is still important for network speed. Internet speed, for instance, is the allocated bandwidth, or the amount of data capable of being sent to you per second. For example, when you are using a 100 Mbps router, you can receive up to 100 megabits (12.5 megabytes) of data per second, but that does not necessarily mean you’ll be receiving 100 megabits per second every time. It depends on other factors such as network congestion and bottlenecks.
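Link rates are quoted in bits per second while file sizes are usually in bytes, so it is easy to mix the two up. The conversion is just a division by 8, as this small sketch shows. The helper names and the 500 MB file are hypothetical, and the download time is a best case that assumes the full bandwidth is available the whole time.

```python
def mbps_to_megabytes_per_s(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def download_time_s(file_megabytes: float, link_mbps: float) -> float:
    """Best-case download time, assuming the whole link rate is usable."""
    return file_megabytes * 8 / link_mbps


print(mbps_to_megabytes_per_s(100))  # 12.5
print(download_time_s(500, 100))     # 40.0
```

In practice the real download takes longer than this best case, because throughput is normally below the advertised bandwidth.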

In Short..

- Throughput– Throughput measures how many packets arrive at their destinations successfully (i.e. efficiency).

- Latency– Latency is the amount of time it takes for a packet to make it from source to destination. Lower latency generally helps throughput.

- Jitter– Jitter refers to the variation in packet delay (i.e. the difference between the delay of one packet and the delay of the next). More jitter usually goes along with lower throughput and higher latency.

- Bandwidth– Network bandwidth is defined as the maximum transfer capacity of a network.

- Speed– Speed is a collective measure of throughput and bandwidth. When someone says the network speed is good, it means throughput is high relative to the available bandwidth.


That’s all, folks. See you in the next interesting post!